Wondering what video encoding is and why it’s important?

In this article, we’ll examine the process of encoding, along with codecs and compression techniques. This includes what makes for a recommended codec, although the answer is situation dependent. It also covers why certain compression-related artifacts might appear in your video. As a result, you’ll walk away with a better understanding of this process and how it relates to adaptive bitrate streaming.

- What is video encoding?

- Why is encoding important?

- What are codecs?

- What’s the best video codec?

- What’s the best audio codec?

- So what are the recommended codecs?

- Compression techniques

- Image resizing

- Interframe and video frames

- Chroma subsampling

- Altering frame rates

What is video encoding?

Video encoding is the process of compressing and potentially changing the format of video content, sometimes even converting an analog source to a digital one. With regard to compression, the goal is for the video to consume less space. This is achieved through a lossy process that throws away information related to the video. Upon decompression for playback, an approximation of the original is created. The more compression applied, the more data is thrown out and the worse the approximation looks versus the original.
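As a toy illustration of that trade-off, the sketch below rounds sample values to a coarser and coarser step and measures how far the result drifts from the original. This is not how any particular codec works (real codecs apply a similar idea to transformed image data), and the function names and sample values are made up for the example.

```python
def quantize(samples, step):
    """Round each sample to the nearest multiple of `step` (a lossy operation)."""
    return [round(s / step) * step for s in samples]


def mean_abs_error(original, approximation):
    """Average absolute difference between two equal-length sequences."""
    return sum(abs(o - a) for o, a in zip(original, approximation)) / len(original)


# Hypothetical 8-bit brightness values from one row of an image.
row = [12, 15, 14, 200, 203, 198, 90, 91]

light = quantize(row, 4)    # mild compression: values barely move
heavy = quantize(row, 32)   # heavy compression: many values collapse together

print("distinct values:", len(set(row)), len(set(light)), len(set(heavy)))
print("error (light):", mean_abs_error(row, light))
print("error (heavy):", mean_abs_error(row, heavy))
```

Fewer distinct values means less information to store, but the larger step also produces a larger error, which is the "worse approximation" described above.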

Why is encoding important?

There are two reasons why video encoding is important. The first, especially as it relates to streaming, is that it makes it easier to transmit video over the Internet. This is because compression reduces the bandwidth required while still delivering a quality experience. Without compression, raw video would exclude many viewers from streaming content over the Internet, because typical connection speeds are not adequate. The important aspect is bit rate, or the amount of data per second in the video. For streaming, this will dictate whether viewers can easily watch the content or will be stuck buffering the video.
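To put rough numbers on this, here is a minimal sketch of the bit rate of uncompressed video. It assumes 24 bits per pixel (8-bit RGB), and the 10 Mbps connection and 3 Mbps encoded stream are hypothetical figures chosen only for illustration.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel=24):
    """Bits per second for uncompressed video (assumes 8-bit RGB by default)."""
    return width * height * bits_per_pixel * fps


def can_stream(video_bps, connection_bps):
    """True if the connection can carry the video without falling behind."""
    return connection_bps >= video_bps


raw = raw_bitrate_bps(1280, 720, 30)
print(f"Raw 720p at 30 fps: {raw / 1_000_000:.1f} Mbps")

# Hypothetical numbers: a 10 Mbps connection and a 3 Mbps encoded stream.
connection = 10_000_000
encoded = 3_000_000
print("raw fits:", can_stream(raw, connection))
print("encoded fits:", can_stream(encoded, connection))
```

Under these assumptions the raw stream is far beyond what the connection can carry, while the encoded stream fits comfortably.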

The second reason for video encoding is compatibility. In fact, sometimes content is already compressed to an adequate size but still needs to be encoded for compatibility, although this is often and more accurately described as transcoding. Being compatible can relate to certain services or programs, which require certain encoding specifications. It can also include increasing compatibility for playback with audiences.

The process of video encoding is dictated by video codecs, or video compression standards.

What are codecs?

Video codecs are video compression standards implemented in software or hardware. Each codec consists of an encoder, to compress the video, and a decoder, to recreate an approximation of the video for playback. The name codec actually comes from merging these two concepts into a single word: enCOder and DECoder.

Example video codecs include H.264, VP8, RV40 and many other standards or later versions of these codecs like VP9. Although these standards are tied to the video stream, videos are often bundled with an audio stream which can have its own compression standard. Examples of audio compression standards, often referred to as audio codecs, include LAME/MP3, Fraunhofer FDK AAC, FLAC and more.

These codecs should not be confused with the containers that are used to encapsulate everything. MKV (Matroska Video), MOV (short for MOVie), AVI (Audio Video Interleave) and other file types are examples of these container formats. These containers do not define how to encode and decode the video data. Instead, they store bytes from a codec in a way that compatible applications can play back the content. In addition, these containers don’t just store video and audio information, but also metadata. This can be confusing, though, as some audio codecs have the same names as file containers, such as FLAC.

What’s the best video codec?

That’s a loaded question which can’t be directly answered without more information. The reason is that different video codecs are best in certain areas.

For high quality video streaming over the Internet, H.264 has become a common codec, estimated to make up the majority of multimedia traffic. The codec has a reputation for excellent quality, encoding speed and compression efficiency, although not as efficient as the later HEVC (High Efficiency Video Coding, also known as H.265) compression standard. H.264 can also support 4K video streaming, which was pretty forward thinking for a codec created in 2003.

As noted, though, a more advanced video compression standard is already available in HEVC. This codec is more efficient with compression, which would allow more people to watch high quality video on slower connections. It’s not alone either. In 2009, Google purchased On2, giving them control of the VP8 codec. While this codec wasn’t able to take the world by storm, it was improved upon and a new codec, dubbed VP9, was released. Netflix tested these later formats versus H.264, using 5,000 12-second clips from their catalog. From this, they found that both of the codecs were able to reduce bitrates by 50% and still achieve similar quality to H.264. Of the two, HEVC outperformed VP9 for many resolutions and quality metrics. The exception was at 1080p resolution, where the results were either close or, in some scenarios, had VP9 as more efficient.

By these tests, wouldn’t this make HEVC the best codec? While technically it’s superior to H.264, that conclusion overlooks a key advantage of the older codec: compatibility. H.264 is widely supported across devices. For example, it wasn’t until iOS 11 in late 2017 that iPhones could support HEVC. As a result, despite not being as advanced, H.264 is still favored in a lot of cases in order to reach a broader audience for playback.

Note that you may also see the name x264 used alongside H.264. This is not a separate codec. Rather, x264 is a free, open source encoder that produces H.264 video, as opposed to the H.264 standard itself or a commercially licensed implementation of it.

What’s the best audio codec?

Like video, different audio codecs excel at different things. AAC (Advanced Audio Coding) and MP3 (MPEG-1 Audio Layer 3) are two lossy formats that are widely known among audio and video enthusiasts. Given that they are lossy, these formats, in essence, delete information related to the audio in order to compress the space required. The job of this compression is to strike the right balance, where a sufficient amount of space is saved without notably compromising the audio quality.

Both of these audio coding methods have been around for a while. MP3 originally came on the scene in 1993, making waves for reducing the size of an audio file down to 10% versus uncompressed standards at the time, while AAC was first released in 1997. Being a later format, it’s probably not surprising that AAC is more efficient at compressing audio. While the exact degree of that advantage has been debated, even the creators of the MP3 format, The Fraunhofer Institute for Integrated Circuits, have declared AAC the “de facto standard for music download and videos on mobile phones”… although the statement conveniently happened after some of their patents for MP3 expired (and also led to a bizarre number of stories claiming MP3 was now dead, an unlikely outcome). So while MP3 has much more mileage with device compatibility to this day, AAC benefits from superior compression and is the preferable method of the two for streaming video content. Not only that, but a lot of video delivery to mobile devices depends on the audio being AAC. IBM’s video streaming and enterprise video streaming offerings are an example of that, although they can transcode the audio to meet these specifications if needed.

AAC and MP3 are far from the only formats for digital audio. There are many other examples, both lossy like WMA (Windows Media Audio) and lossless like ALAC (Apple Lossless Audio Codec). One of these formats is FLAC (Free Lossless Audio Codec), which is lossless. This means the original audio data can be perfectly reconstructed from the compressed data. While the size of the audio track is smaller than WAV (Waveform Audio File Format), an uncompressed format, it still requires notably more data for an audio stream compared to lossy formats like AAC and MP3. As a result, while lossless audio is seen on physical media like Blu-rays, it’s less common for streaming where size is important.

So what are the recommended codecs?

Favoring compatibility, H.264 and AAC are widely used. IBM’s video streaming and enterprise video streaming offerings support both the H.264 video codec and the AAC audio codec for streaming. While neither is cutting edge, both can produce high quality content with good compression applied. In addition, video content compressed with these codecs can reach large audiences, especially over mobile devices.

Compression techniques

Now that we have examined codecs a bit, let’s look into a few compression techniques. These techniques are utilized by codecs to intelligently reduce the size of video content. The goal is to do so without hugely impacting video quality. That said, certain techniques are more noticeable to the end viewer than others.

Image resizing

A common technique for compression is resizing, or reducing the resolution. This is because the higher the resolution of a video, the more information that is included in each frame. For example, a 1280×720 video has the potential for 921,600 pixels in each frame, assuming it’s an i-frame (more on this in a bit). In contrast, a 640×360 video has the potential for 230,400 pixels per frame.

So one method to reduce the amount of data is to “shrink” the image size and then resample. This will create fewer pixels, reducing the level of detail in the image at the benefit of decreasing the amount of information needed. This concept has become a cornerstone to adaptive bitrate streaming. This is the process of having multiple quality levels for a video, and it’s common to note these levels based on the different resolutions that are created.
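The pixel counts above, and the idea of shrinking and resampling, can be sketched in a few lines. Averaging each 2×2 block is just one simple resampling method picked for illustration; real encoders and scalers typically use more sophisticated filters, and the function names here are hypothetical.

```python
def pixel_count(width, height):
    """Number of pixels in a single full frame."""
    return width * height


def downsample_2x(image):
    """Halve width and height by averaging each 2x2 block of pixels.

    `image` is a list of rows of grayscale values with even dimensions.
    """
    out = []
    for y in range(0, len(image), 2):
        row = []
        for x in range(0, len(image[y]), 2):
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total // 4)
        out.append(row)
    return out


# 1280x720 carries four times as many pixels per frame as 640x360.
print(pixel_count(1280, 720), pixel_count(640, 360))

# A tiny 4x2 "image": each 2x2 block becomes a single averaged pixel.
tiny = [
    [10, 20, 200, 220],
    [30, 40, 210, 230],
]
print(downsample_2x(tiny))
```

Each output pixel stands in for four input pixels, which is where both the data savings and the loss of detail come from.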

An artifact of resizing can be the appearance of “pixelation”. This is sometimes called macroblocking, although macroblocking is usually more pronounced than mere pixelation. In general, this is a phenomenon where parts of an image look blocky. It can be caused by a combination of a low resolution image and interframe compression, where details in the video are actually changing but areas of the video are being kept as part of the interframe process. While this reduces the amount of data the video requires, it comes at the cost of video quality.

Note that this resizing process is sometimes referred to as scaling as well. However, scaling sometimes takes on a meaning that does not involve compression. For example, sometimes it’s used to describe taking the same size image and presenting it in a smaller fashion. In these scenarios, the number of pixels isn’t changing and so there is no compression being applied.

Interframe and video frames

One video compression technique that might not be widely realized is interframe. This is a process that reduces “redundant” information from frame to frame. For example, a video with an FPS (frames per second) of 30 means that one second of video equals 30 frames, or still images. When played together, they simulate motion. However, chances are elements from frame to frame within those 30 frames (a set of frames like this is referred to as a GOP, or Group of Pictures) will remain virtually the same. Realizing this, interframe was introduced to remove redundant data, essentially cutting the data that would otherwise be used to convey that an element has not changed across frames.

To better understand this concept, let’s visualize it.

Here is a still image of a volleyball being thrown and spiked. There is quite a bit of movement in this example, with the volleyball being thrown up, the ball being spiked, sand flying and elements like trees and water moving in the wind. Regardless, there are parts that can be reused, specifically areas of the sky. These are seen in the blocked off area, as these elements don’t change. So rather than spend valuable data to convey that parts of the sky haven’t changed, they are simply reused to conserve space. As a result, only the below element of the video is actually changing between frames in this series.
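The idea of reusing unchanged regions can be sketched by splitting two frames into blocks and listing only the blocks that differ. This is a deliberate simplification: real codecs such as H.264 use motion estimation and encode small differences rather than testing for exact equality, and the `changed_blocks` function and tiny frames below are hypothetical.

```python
def changed_blocks(prev_frame, next_frame, block_size):
    """Return (block_row, block_col) for each block that differs between frames.

    Frames are equal-sized lists of rows of pixel values whose dimensions
    are multiples of `block_size`.
    """
    changed = []
    for by in range(0, len(prev_frame), block_size):
        for bx in range(0, len(prev_frame[0]), block_size):
            prev_block = [row[bx:bx + block_size]
                          for row in prev_frame[by:by + block_size]]
            next_block = [row[bx:bx + block_size]
                          for row in next_frame[by:by + block_size]]
            if prev_block != next_block:
                changed.append((by // block_size, bx // block_size))
    return changed


# Hypothetical 4x4 frames: the top half ("sky") is static, while one
# pixel in the bottom-right block ("ball") changes.
frame_a = [
    [9, 9, 9, 9],
    [9, 9, 9, 9],
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
frame_b = [
    [9, 9, 9, 9],
    [9, 9, 9, 9],
    [1, 1, 2, 7],
    [1, 1, 2, 2],
]

print(changed_blocks(frame_a, frame_b, 2))
```

Only the blocks in the returned list would need new data for the second frame; everything else can be carried over from the first.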

This technique, built into video codecs like H.264, explains why videos with higher motion take up more data or look worse when heavy compression is applied. To achieve this, the process utilizes three different types of frames: i-frames, p-frames and b-frames.

I-frame

Also known as a keyframe, this is a full frame image of the video. How often the i-frame appears depends on how it was encoded. Encoders like Telestream Wirecast and vMix will allow you to select the keyframe interval. This determines how often an i-frame will be created. The more often an i-frame is created, the more space this requires. However, there are benefits to creating periodic i-frames every 2 seconds. The largest of these relates to adaptive streaming, which can only change quality settings on an i-frame.
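As a quick sketch of what a keyframe interval means in frames, the function below lists where i-frames would land for a given frame rate and interval. It assumes a whole-number frame rate and a fixed interval, and the function name is made up for this example; actual encoders may also insert extra keyframes, for instance at scene changes.

```python
def keyframe_indices(fps, interval_seconds, duration_seconds):
    """Frame numbers (starting at 0) where an i-frame would be placed."""
    frames_between_keyframes = fps * interval_seconds
    total_frames = fps * duration_seconds
    return list(range(0, total_frames, frames_between_keyframes))


# 30 fps with a 2 second keyframe interval over a 10 second clip.
print(keyframe_indices(fps=30, interval_seconds=2, duration_seconds=10))
```

With these settings an i-frame appears every 60 frames, giving an adaptive player a chance to switch quality levels every 2 seconds.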

P-frame

Short for predictive frame, this is a delta frame that only contains some of the image. It will look backwards toward an i-frame or another p-frame to see if part of the image is the same. If so, that portion will be excluded to save space.

B-frame

Short for bi-directional predictive frame, this is a delta frame that also only contains some of the image. The difference between this and a p-frame, though, is that it can look backwards or forwards for other delta frames or i-frames when choosing what details to leave out as they exist in another frame. As a result, b-frames offer improved compression without detracting from the viewing experience. However, they do require a higher encoding profile.

For more details on this entire process, check out our Keyframes, InterFrame & Video Compression article.

Chroma subsampling

Ever seen a gradient (where the colors slowly transition from one to the other) that had a harsh, unnatural look to it? This might be the result of reducing the number of colors in the image.

As might be expected, the more color information that is required to represent an image, the more space it’ll take. As a result, one way to compress data for video is to discard some of this color information. This is a process called chroma subsampling. The principal idea behind it is that the human eye has a much easier time detecting differences in luminance than chroma information. As a result, it tries to preserve the luminance while just sacrificing color quality.

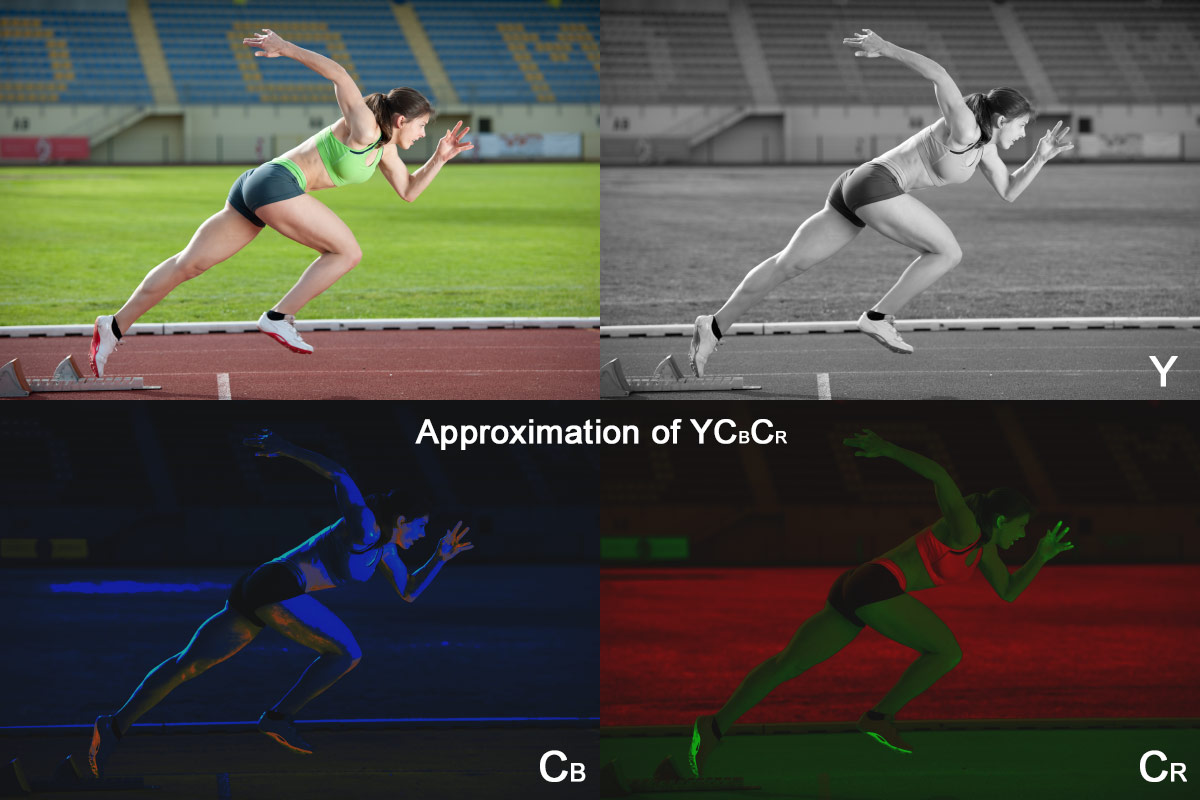

In a nutshell, this is achieved through converting an RGB (Red, Green, Blue) spectrum into YCbCr. In doing this, luminance is separated (noted as “Y”) and can be delivered uncompressed. Meanwhile, chroma information is contained in Cb and Cr, with the potential to reduce them to save space. This is a hard process to describe, but essentially think of the “Y” as a grayscale version of the image. This is overlaid with a Cb “version”, which will contain shades of yellow and blue, and a Cr “version”, which will contain shades of green and red.

Add all of these elements together and you have a complete, colored image.
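For the curious, here is a minimal sketch of that color conversion. It assumes the full-range BT.601 coefficients used by JPEG/JFIF; video workflows may use different matrices and value ranges, so treat the exact numbers as one common convention rather than the only one.

```python
def clamp_8bit(value):
    """Round to the nearest integer and keep the result within 0..255."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to 8-bit YCbCr (full-range BT.601, as in JFIF)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return clamp_8bit(y), clamp_8bit(cb), clamp_8bit(cr)


# Mid gray has no color, so both chroma values sit at the neutral 128.
print(rgb_to_ycbcr(128, 128, 128))

# Pure red pushes the red-difference channel (the third value) to its maximum.
print(rgb_to_ycbcr(255, 0, 0))
```

Notice that the first value alone describes brightness, which is why it can be kept at full detail while the other two are reduced.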

When it comes to compressing this, it’s generally noted in a fashion similar to 4:2:2 versus an uncompressed version at 4:4:4. In the latter example, chroma subsampling hasn’t been applied and each component has the same sample rate, so no compression is applied. Each of the numbers lines up with a component of YCbCr. So 4:2:2 means that the grayscale version (Y) is not being compressed as it’s still 4, while Cb and Cr are each being reduced to 2 so they have half the sample rate of the luminance. As a result, the amount of bandwidth required is dropped by about 33% ([4+2+2] / [4+4+4] ≈ 67% of the data versus the original). Other variants exist, for example 4:1:1, which reduces the bandwidth even more, and 4:2:0, which actually alternates between Cb and Cr on horizontal lines to compress.
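The same arithmetic can be applied to the other variants. The sketch below uses the simple sum from the paragraph above, (first + second + third) / (3 × first), and the function name is hypothetical.

```python
from fractions import Fraction


def relative_data(j, a, b):
    """Fraction of the unsubsampled data kept by a J:a:b scheme.

    Uses the same simple sum as the text: (J + a + b) / (3 * J).
    """
    return Fraction(j + a + b, 3 * j)


for scheme in [(4, 4, 4), (4, 2, 2), (4, 1, 1), (4, 2, 0)]:
    kept = relative_data(*scheme)
    label = ":".join(str(n) for n in scheme)
    print(f"{label} keeps {float(kept):.0%} of the original data")
```

By this measure, 4:1:1 and 4:2:0 both keep half of the original data, compared to roughly two thirds for 4:2:2.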

Altering frame rates

Another method to compress video is to reduce the number of frames per second. This reduces the amount of data that a video will require, as fewer frames are needed to convey each second. This can be a bit more destructive than other methods, though, as lowering the frame rate too much will result in video that lacks fluid movement.
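In its simplest form, this means keeping only every Nth frame. The sketch below assumes the source frame rate is a whole multiple of the target (for example 60 to 30) and uses plain numbers as stand-ins for frames; the function name is made up for illustration.

```python
def reduce_frame_rate(frames, source_fps, target_fps):
    """Keep evenly spaced frames so the clip plays at `target_fps`.

    Assumes `source_fps` is a whole multiple of `target_fps`.
    """
    if source_fps % target_fps != 0:
        raise ValueError("source_fps must be a multiple of target_fps")
    step = source_fps // target_fps
    return frames[::step]


one_second = list(range(60))  # stand-ins for 60 frames of video
halved = reduce_frame_rate(one_second, 60, 30)
print(len(one_second), "frames reduced to", len(halved))
```

Half the frames means roughly half the images to describe each second, at the cost of less fluid motion.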

Summary

You should now know the basics of encoding and why it’s done. You should also walk away knowing how content is compressed without hugely impacting perceived quality. Action items can include using the recommended codecs and choosing a few settings that are better suited for your distribution method. An example of the latter would be a keyframe interval of 2 seconds to be mindful of adaptive streaming.

Ready to take your broadcasting to the next step? If so, be sure to also check out The Definitive Guide to Enterprise Video eBook to understand what enterprise video can do for you, how fast it is growing and what requirements make it agile and cost efficient.

Note that we did not cover interlacing in this article. Even though it is a compression technique, it’s not widely used on content streamed over the Internet and is found more in traditional televised content. That said, for those curious about it and also deinterlacing, i.e. trying to reverse the process, please reference this Interlaced Video & Deinterlacing for Streaming article.